The rise of artificial intelligence (AI) in education is changing how student assessments are conducted. This technological leap brings a set of ethical dilemmas that educators and institutions must address. Are we ready to trust AI with such a pivotal role in shaping young minds? Can AI truly be unbiased and fair in assessing students? In this article, we’ll look at the ethical considerations surrounding AI in student assessment, weighing the potential benefits against the risks and what they mean for the future of student assessments.

Key Takeaways

- AI is revolutionizing student assessments, offering efficiency but also ethical challenges.

- Addressing AI bias is crucial to ensure fairness and equity in education.

- Transparency and accountability are vital in AI-driven assessments.

- Protecting student privacy is a top priority in the AI integration process.

- Automated decision-making in education requires careful ethical consideration.

- Following best practices ensures ethical AI use in student assessments.

The Role of AI in Modern Student Assessment

Artificial Intelligence (AI) is reshaping how student assessments are conducted. With AI, assessments are becoming more efficient, scalable, and personalized, providing tailored feedback that caters to individual student needs. However, integrating AI into student assessment also raises challenges, particularly ethical ones.

- AI is increasingly used to grade assignments, quizzes, and even essays, offering immediate feedback that can enhance the learning process.

- Efficiency is one of AI’s greatest strengths, allowing for the quick processing of large volumes of assessments, which would be overwhelming for human educators.

- AI-powered tools, such as adaptive testing platforms, can adjust the difficulty of questions based on student performance in real time, providing a more accurate measure of student abilities.
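To make the adaptive testing idea concrete, here is a minimal sketch of a "staircase" rule: one level harder after a correct answer, one level easier after a miss. It is an illustration only. The function names, the 1 to 5 difficulty scale, and the starting level are assumptions, and real adaptive testing platforms typically use more sophisticated statistical models.

```python
def next_difficulty(current: int, was_correct: bool,
                    lowest: int = 1, highest: int = 5) -> int:
    """Move one level up after a correct answer, one level down after a miss.

    The 1-5 scale is a hypothetical choice for this sketch.
    """
    step = 1 if was_correct else -1
    # Clamp so the level never leaves the allowed range.
    return max(lowest, min(highest, current + step))


def run_session(answers: list[bool], start: int = 3) -> list[int]:
    """Return the difficulty level presented for each question, in order."""
    levels = []
    level = start
    for was_correct in answers:
        levels.append(level)  # level shown for this question
        level = next_difficulty(level, was_correct)
    return levels


if __name__ == "__main__":
    # Three correct answers followed by one miss.
    print(run_session([True, True, True, False]))
```

Even a rule this simple shows why oversight matters: the choice of starting level and step size shapes which questions a student ever sees.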

Yet, as AI continues to take on a more significant role in student assessment, it’s essential to consider the ethical implications. The efficiency and scalability of AI are impressive, but they also raise questions about the fairness and accuracy of these assessments. AI systems, while powerful, are not infallible, and the potential for errors and biases in grading cannot be ignored.

For example, AI tools like Turnitin and Gradescope are widely used in educational institutions. These tools streamline the grading process, but they also highlight the need for transparency and accountability. As educators and institutions adopt these technologies, they must ensure that AI systems are used ethically, with a focus on enhancing student learning rather than merely automating processes.

AI ethics in student assessment should always be a priority when integrating these technologies. As schools continue to adopt AI-powered assessment tools, the balance between leveraging AI’s benefits and addressing its ethical challenges will be critical. This involves not only understanding the potential risks but also implementing strategies to mitigate them.

For those interested in further exploring the integration of AI ethics into education, our guide on Integrating AI Ethics in STEM: A Guide for Educators offers valuable insights.

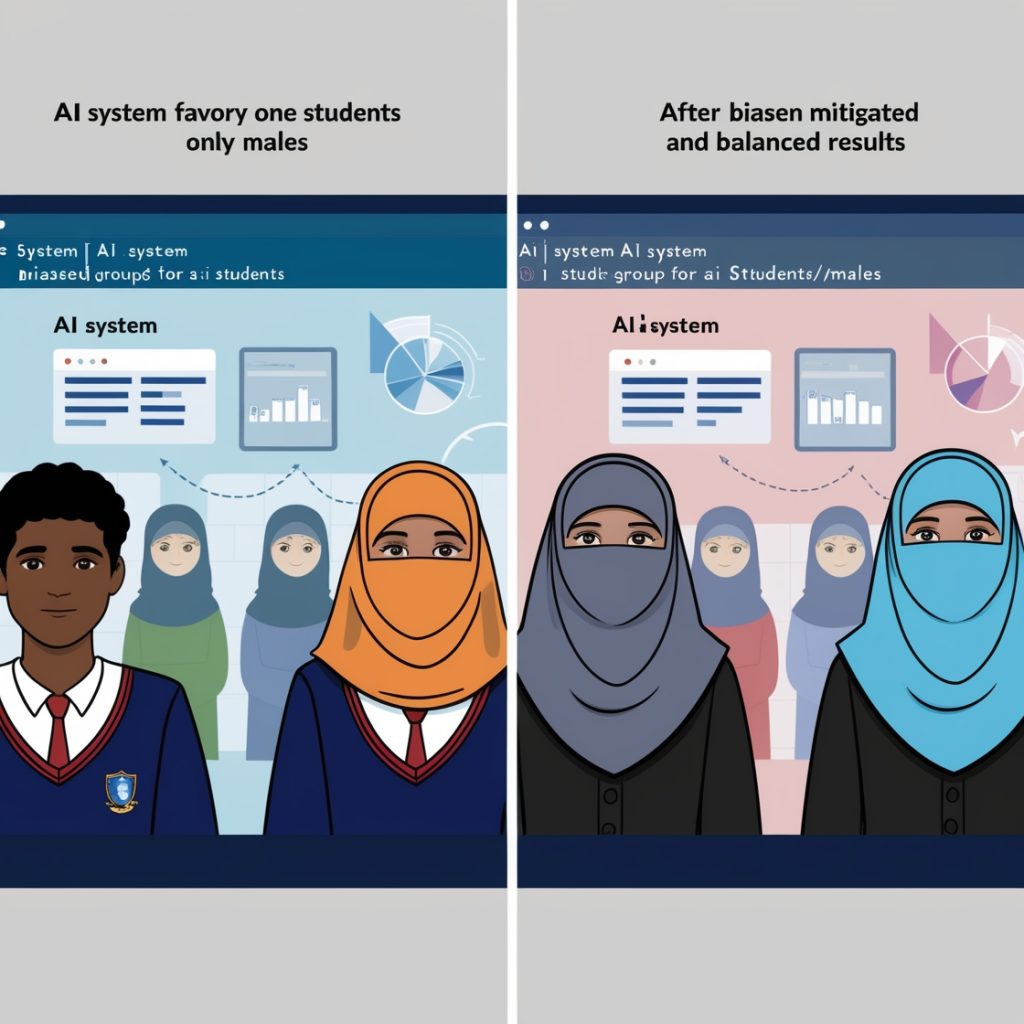

Fairness and Bias in AI Assessments

AI in education offers many benefits, but it also comes with significant risks, particularly concerning fairness and bias. AI systems, by nature, learn from data, and if that data is biased, the assessments they produce can be biased as well. This is a critical consideration in the ethics of AI-driven student assessment, as biased assessments can have lasting effects on students.

- Bias in AI algorithms can manifest in various ways, such as favoring certain demographic groups over others, which can perpetuate existing inequalities in education.

- Reported cases in which biased AI assessments disadvantaged students highlight the importance of addressing these issues proactively.

- To mitigate bias in AI systems, it is crucial to ensure that the data used to train these algorithms is diverse and representative of the student population.

Addressing bias is not just about fixing the algorithms; it requires a broader commitment to fairness and equity in education. Schools must be vigilant in monitoring AI systems and be prepared to intervene when necessary to ensure that all students are assessed fairly.

One way to combat bias is by implementing strategies that focus on the continuous evaluation and adjustment of AI systems. This includes regular audits of AI tools to identify and correct biases, as well as involving a diverse group of educators and students in the development and deployment of these systems.
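As a rough illustration of what a regular audit could check, the sketch below compares AI scores with human scores on the same work and reports the average gap per student group. Everything here is an assumption made for the example: the record layout, the group labels, and the tolerance of 2 points. It is a starting point for discussion, not a complete fairness analysis.

```python
from collections import defaultdict
from statistics import mean


def score_gap_by_group(records):
    """Return the mean (AI score - human score) for each group.

    Each record is a hypothetical (group, ai_score, human_score) tuple.
    """
    gaps = defaultdict(list)
    for group, ai_score, human_score in records:
        gaps[group].append(ai_score - human_score)
    return {group: mean(values) for group, values in gaps.items()}


def flag_groups(gap_by_group, tolerance=2.0):
    """List groups whose average gap exceeds the tolerance in either direction."""
    return sorted(g for g, gap in gap_by_group.items() if abs(gap) > tolerance)


if __name__ == "__main__":
    # Made-up sample data, for illustration only.
    sample = [
        ("A", 80, 80), ("A", 90, 88),
        ("B", 70, 75), ("B", 60, 66),
    ]
    gaps = score_gap_by_group(sample)
    print(gaps)
    print(flag_groups(gaps))
```

A flagged group is a prompt for human review, not proof of bias: small samples and differences in the work itself can also produce gaps.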

It’s also essential for educators to understand the limitations of AI and to maintain human oversight in the assessment process. While AI can provide valuable insights, it should not be the sole determinant of a student’s abilities or future. Educators must balance AI’s capabilities with their own judgment to ensure that assessments are both fair and accurate.

For more on how to combat bias in AI, check out our article on Combating AI Bias in Education: Key Strategies.

Transparency and Accountability in AI-Driven Assessments

Transparency and accountability are critical components of ethical AI use in education. In AI-driven student assessment, these principles ensure that AI systems are used responsibly and that their decision-making processes are open to scrutiny.

- AI systems should be transparent in how they reach their conclusions, allowing educators, students, and parents to understand the factors influencing assessments.

- Accountability is essential in AI-driven assessments, as it ensures that there is a clear process for addressing any issues that arise, such as errors or biases in grading.

- Educators play a crucial role in overseeing AI-based assessments, ensuring that these tools are used in a way that supports student learning and development.

Transparency in AI decision-making processes means that AI tools should not operate as “black boxes.” Stakeholders need to understand how AI algorithms function and what data they use to make decisions. This transparency builds trust in AI systems and allows for more informed discussions about their use in education.

Accountability is equally important. When AI systems make mistakes, there must be a clear path to correct these errors. This might involve allowing students to appeal their AI-generated grades or providing educators with the tools to override AI decisions when necessary.
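One way to picture such a correction path is a grade record that keeps the AI score, lets a student open an appeal, and lets an educator override the score with a written reason. The sketch below is hypothetical: the class name, fields, and rules are assumptions for illustration and do not describe any particular grading product.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class GradeRecord:
    """Hypothetical record pairing an AI score with human review."""
    student_ref: str
    ai_score: float
    educator_score: Optional[float] = None
    override_reason: str = ""
    appeal_open: bool = False
    history: list = field(default_factory=list)  # audit trail of (event, note)

    @property
    def final_score(self) -> float:
        # The educator's score, when present, takes precedence over the AI's.
        return self.ai_score if self.educator_score is None else self.educator_score

    def open_appeal(self, note: str) -> None:
        self.appeal_open = True
        self.history.append(("appeal", note))

    def override(self, score: float, reason: str) -> None:
        # Require a written reason so every change can be explained later.
        if not reason.strip():
            raise ValueError("An override needs a written reason")
        self.educator_score = score
        self.override_reason = reason
        self.appeal_open = False
        self.history.append(("override", reason))


if __name__ == "__main__":
    record = GradeRecord(student_ref="s-001", ai_score=62.0)
    record.open_appeal("Essay argument was misread")
    record.override(71.0, "Rubric criterion 2 was applied incorrectly")
    print(record.final_score, record.history)
```

Keeping the original AI score alongside the override, rather than overwriting it, preserves the evidence needed for later audits.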

Legal and ethical frameworks guide AI transparency and accountability in education. These frameworks help schools navigate the ethical complexities of AI-driven student assessment, ensuring that AI tools are implemented in ways that respect students’ rights and promote fair and accurate assessments.

For educators looking to enhance their understanding of transparency in AI, our article on Digital Citizenship in AI 10: Navigating Ethical Challenges provides practical advice.

Privacy Concerns in AI-Driven Student Assessments

Student data privacy is a significant concern when using AI in education. AI-driven assessments often require access to large amounts of data, raising important questions about how this data is used and protected. Protecting student privacy is therefore paramount in any ethical approach to AI-driven assessment.

- AI systems use various types of student data, including academic records, behavioral data, and even personal information, to generate assessments.

- These data points, while useful for creating personalized assessments, pose significant privacy risks if not managed correctly.

- Educators and institutions must adopt best practices for protecting student data, ensuring that privacy is not compromised in the pursuit of AI-driven efficiency.

One of the main challenges is finding the right balance between data utility and privacy. While AI can provide valuable insights into student performance, this should not come at the expense of students’ privacy rights. Schools must implement robust data protection measures to ensure that student information is secure and that any data used by AI systems is handled responsibly.

Best practices for protecting student data include anonymizing data where possible, limiting access to sensitive information, and regularly reviewing data protection policies to keep up with evolving threats. It’s also important for schools to be transparent with students and parents about how their data is being used and to give them control over their data.
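The sketch below illustrates two of these practices together: replacing a student ID with a keyed hash and keeping only the fields an assessment tool needs. Strictly speaking this is pseudonymization rather than full anonymization, since anyone holding the key can recompute the mapping. The field names and the sample key are assumptions for the example; a real deployment would store the key securely and follow applicable regulations.

```python
import hashlib
import hmac


def pseudonymize(student_id: str, secret_key: bytes) -> str:
    """Return a stable, keyed reference that does not reveal the student ID."""
    digest = hmac.new(secret_key, student_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]  # shortened for readability in this sketch


def strip_identifiers(record: dict, secret_key: bytes,
                      keep=("score", "assignment")) -> dict:
    """Keep only whitelisted fields and swap the ID for a pseudonym.

    The field names here are hypothetical.
    """
    cleaned = {name: record[name] for name in keep if name in record}
    cleaned["student_ref"] = pseudonymize(record["student_id"], secret_key)
    return cleaned


if __name__ == "__main__":
    key = b"demo-key-do-not-use-in-production"
    raw = {"student_id": "12345", "name": "Ada", "score": 88, "assignment": "essay-1"}
    print(strip_identifiers(raw, key))
```

Using a whitelist of fields to keep, rather than a list of fields to remove, means that new sensitive fields are excluded by default.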

Privacy is a key aspect of AI ethics in education, and educators must be proactive in addressing these concerns. By adopting a privacy-first approach, schools can ensure that AI systems enhance student learning without compromising their privacy.

For a deeper dive into student privacy concerns, see our AI Student Privacy: Essential Guide for Educators.

Ethical Implications of Automated Decision-Making in Education

Automated decision-making is at the heart of AI’s role in education, but it also raises significant ethical concerns. Any ethical approach to AI in student assessment must consider the impact of these automated decisions on student outcomes, particularly when they can affect a student’s academic trajectory.

- AI decisions can have a profound impact on student outcomes, from grading to determining eligibility for advanced programs.

- There is an ongoing debate about the role of human vs. AI judgment in education, with some arguing that AI should only assist, not replace, human decision-making.

- Ethical concerns also arise when human oversight is removed from the assessment process, as this can lead to decisions that are difficult to challenge or reverse.

The debate over AI in education often centers around the tension between efficiency and ethical responsibility. While AI can streamline decision-making processes, there is a risk that these decisions may not fully account for the complexities of individual student circumstances. This is why it’s essential to maintain a balance between AI automation and human oversight.

One of the most critical ethical implications of automated decision-making is that AI can produce decisions that are hard to understand or contest. For example, if an AI system incorrectly assesses a student’s abilities, the student may struggle to challenge the result without a clear explanation of how it was reached.

The future of AI in education likely lies in human-AI collaboration, where AI supports educators rather than replacing them. This approach ensures that AI’s strengths are harnessed while maintaining the critical role of human judgment in the education process.

For educators interested in exploring the ethical implications of AI further, our guide on Teaching AI Ethics in K-12: A Guide for 2024 & Beyond offers practical insights.

Best Practices for Ethical AI Use in Student Assessment

To navigate the complex ethical landscape of AI in student assessment, educators and institutions must adopt best practices that prioritize ethical considerations. These practices ensure that AI is used in ways that benefit students and uphold the integrity of the educational process.

- Guidelines for ethical AI implementation include ensuring that AI tools are used to support, not replace, human educators and that they are deployed in ways that promote fairness and transparency.

- Continuous monitoring and evaluation of AI systems are crucial to identify and address any issues that arise, such as biases or inaccuracies in assessments.

- Involving students and parents in discussions about AI assessments helps build trust and ensures that all stakeholders understand how AI is being used.

Building an ethical framework for AI in education is not just about following rules—it’s about creating an environment where AI supports positive educational outcomes. This involves ongoing education and training for educators, as well as clear communication with students and parents about the role of AI in their education.

Monitoring AI systems is an essential part of ethical AI use. Regular audits and evaluations help identify potential problems before they impact students, ensuring that AI systems remain fair and accurate. Schools should also be prepared to make adjustments to AI tools as needed, based on feedback from educators and students.

Involving students and parents in discussions about AI is another best practice that promotes transparency and trust. By engaging with these stakeholders, schools can ensure that AI tools are used in ways that are understood and accepted by those they impact the most.

For a comprehensive guide on ethical AI implementation, see our article on Digital Citizenship in AI 10: Navigating Ethical Challenges.

Conclusion

As AI continues to influence student assessments, it’s crucial to address the ethical challenges head-on. Ensuring fairness, transparency, and privacy is not just an option—it’s a necessity. By following best practices and remaining vigilant about potential biases and privacy concerns, educators can harness the power of AI while safeguarding the integrity of the educational process. What steps will you take to ensure that AI serves as an ethical and effective tool in student assessment?